Foundation Models framework — Apple's Stealth Edge-AI Play

The most exciting thing announced at WWDC25 will bring hidden super-powers to your app.

We just wrapped up a #WWDC25 private lab session with a member of the Apple on-device Foundation Models framework team a few moments ago.

illumineX had been working on a use case for a client that had a missing puzzle piece, just about the size and shape of an on-device Foundation Model.

Drop it in!

What Sort of Tool is the Foundation Model?

Part of a family of models under development by Apple for several years, the on-device model is small but mighty. At 3 billion parameters, its compact architecture allows it to outperform models over twice its size for many tasks.

This document provides a detailed overview of the system architecture and Apple's approach to training.

Updates to Apple's On-Device and Server Foundation Language Models

One fascinating thing is that Apple has developed an architecture that allows them to rapidly train the model, facilitating continuous improvement to the Foundation Model itself.

Other features include:

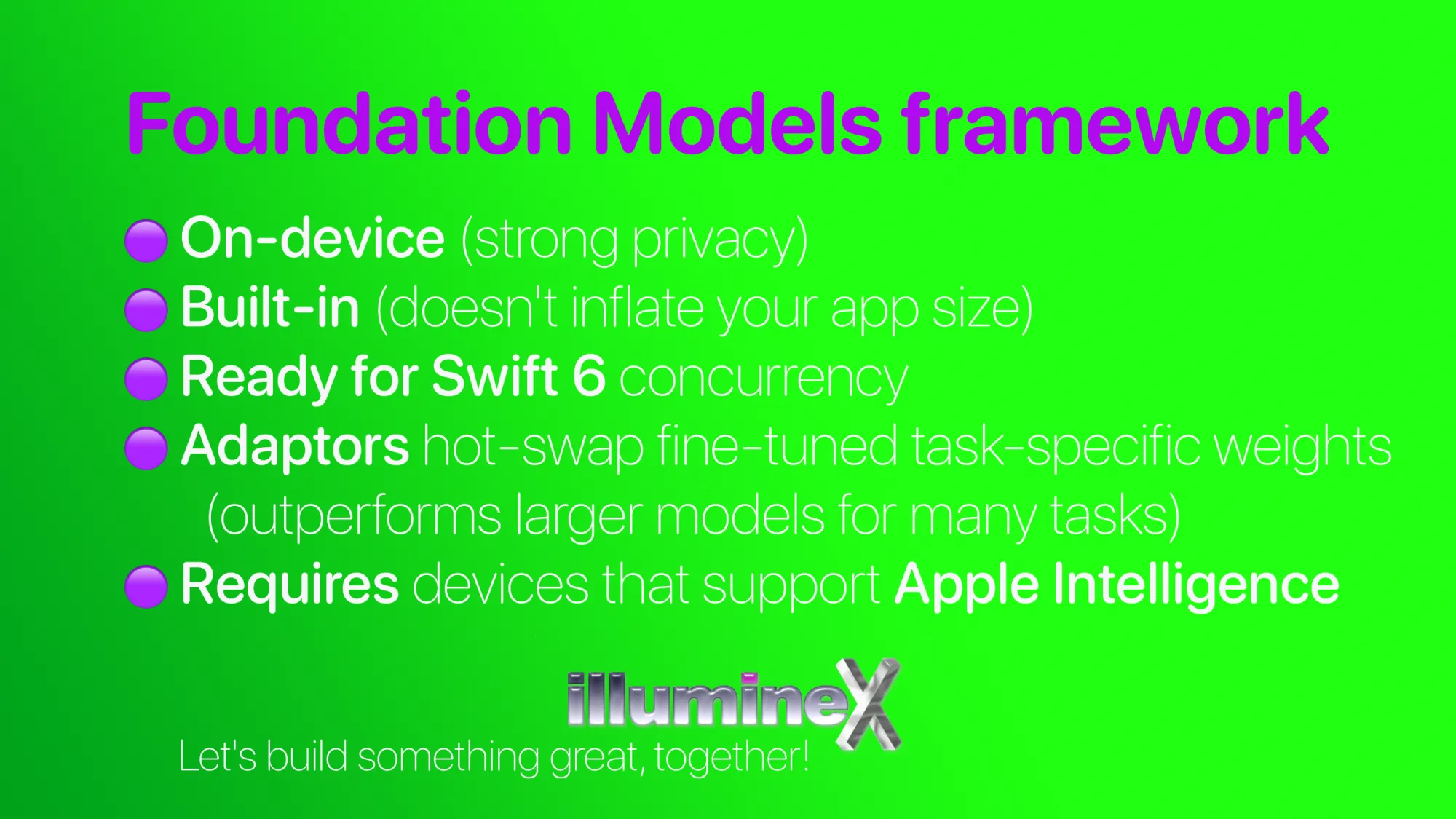

🟣 On-device Foundation Model (strong privacy)

🟣 Built-in (doesn't inflate your app size)

🟣 Ready for Swift 6 concurrency (can dynamically load a view as responses come back from the model, rather than waiting until the very end)

🟣 Adaptors hot-swap fine-tuned task-specific weights (Apple's Foundation Model outperforms larger models for many tasks)

🟣 Requires devices that support Apple Intelligence (some of your user community won't have these newer devices, yet, so you'll want to make sure your product has a fall-back strategy).

🟣 Requires macOS26, iOS26, iPadOS26

For additional detail, see this discussion at Apple Machine Learning Research.

Introducing Apple’s On-Device and Server Foundation Models

Pick the Right Problem for the Solution

It might seem backwards, to pick the right problem for the tool at hand, but there are a great many problems in the world that lack great solutions, today.

You've got a stack of problems and opportunities in your app, and some of those problems might well yield to Foundation Models framework!

The key to success for using Foundation Models (or most any new technology) is to figure out what they're good at, and apply them to problems that have some tolerance for error.

Output from an LLM is non-deterministic — if you ask exactly the same question the answer you get might be equally "correct" but it won't be "exactly the same".

Of course, there's also a nontrivial chance of getting answers that are completely *wrong* or even just nonsense.

A good strategy for interacting with such a system to tailor your queries to be as clear and concise as possible, so that the model isn't accidentally led astray by extraneous information in the query.

Foundation Models Can Be Obstinate (It's a Security Feature!)

Models can also sometimes refuse to answer your query, at all! (Returning an error, instead of an answer.)

Why would *that* happen?

Well, the system prompt (or equivalent) for most LLMs, to which *your* prompt is appended, includes instructions that are designed to be "guard rails" to prevent the system from doing naughty things.

There are other elements of some LLMs which might prevent the model from even being allowed to *parse* your input.

Consider a use-case where your query is constructed dynamically from the contents of a web page visited by your product's end user.

If the site they visit has been compromised, you might accidentally gather up some input that's an attempt at a prompt injection attack, and then feed that into the model — without you or your user even being aware.

There's quite a bit of "bad press" about the delay in Siri, but my team are excited about bringing on-device Foundation Model features to products for our clients.

Apple: a Stealth AI Leader

Looking at the quality of the *visible* Apple work in AI should leave one with the impression that the company is already a more serious player in the field than what they're given credit for in the tech blogosphere.

(Current production Siri isn't a Foundation Model (yet!) and isn't an example of machine learning nor "AI" work, by the way; the Siri architecture predates these technologies by several years, it's more like a VRU (voice response unit) call tree with a few voice recognition and speech synthesis tricks welded on).

Consider Apple's Private Cloud Compute architecture as one example, and several of Apple Machine Learning research papers as others.

Below is a link to the intro session for the Foundation Models framework. It's an accessible intro to the on-device Foundation model (3 billion parameters) that will be available for developers to use on devices that support Apple Intelligence on 26 operating systems.

Would you like help evaluating your app and feature backlog to see if there are opportunities to delight your users with Foundation Models framework?

Contact me if you're wondering if your app can provide a better user experience or a new capability by tapping into the power of Apple's on-device Foundation Model framework.